
AI viral image trends are not all fun and games

As the use of generative AI grows in Nepal, so do the forms of abuse this new technology has unleashed.

Aarati Ray

In March, when the Ghibli filter trend was all the rage online, Suchita’s inbox was filled with cute, animated versions of her group photos with friends.

Suchita, 16, was enjoying those quirky photos until her ex sent one that didn’t feel right.

“It was a picture of us in our school uniforms,” said Suchita, whom the Post is identifying with a pseudonym for privacy reasons. “But the AI version was manipulated to show me in a sexualised and inappropriate manner.”

When Suchita confronted her ex, he shrugged it off. “It’s just a cartoon,” he said, adding that she couldn’t even prove it was her. Alarmed, she blocked him. Weeks later, the incident still haunts her.

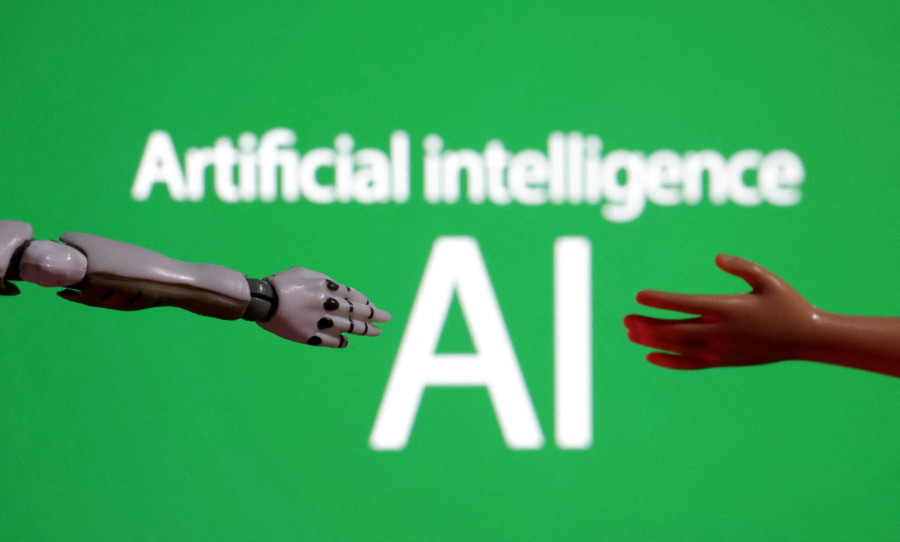

Over the past month, AI-powered image trends have dominated social media like never before. March’s social media feeds were swept up in a hurricane of dreamy, AI-generated images modelled after the filmmaking of Studio Ghibli, the Japanese animation studio.

By April, the trend shifted to users turning themselves into plastic-perfect action figures, complete with custom outfits and packaging inspired by brands like Barbie.

Then came the pets turned into people. Upload a photo of your dog, and AI returns a startlingly lifelike human version of the canine.

Beyond these quirky features, newer generative AI models like GPT-4o are gaining traction, especially among digital-native teens and children, by addressing earlier flaws such as distorted hands and garbled text.

As AI use grows, so have the forms of abuse this brave new technology has unleashed.

As of the first week of April, Nepal Police have recorded 525 cybercrime cases involving children this fiscal year, including online sexual offences such as receiving calls and messages of a sexual nature, inappropriate photos and links, and photo mutilation. Photo mutilation, driven by AI, is the most common issue across all age groups, especially among girls and women.

Experts caution that the features driving generative AI’s popularity, such as instant edits and viral sharing, also make it highly exploitable, as seen in Suchita’s case.

With children and teens at the forefront, AI-driven photo mutilation and child sexual abuse material (CSAM) cases could rise. The growing trend of turning real images into cartoon or anime-style content may also make CSAM harder to detect and prevent.

The biggest concern with these AI trends like ‘Ghibli-fy’ is privacy, says Anil Raghuvanshi, founder and president of ChildSafeNet, an NGO advocating for safer internet for children.

“When we upload images to ChatGPT or other AI tools, the data is stored and often used as training material,” he says. “This means our photos can remain in AI systems indefinitely and be repurposed for future image generation, videos, or animations.”

The larger the dataset, the more realistic the outputs. And the collected data can be misused by bad actors, Raghuvanshi says.

For instance, a July 2024 report by the Internet Watch Foundation (IWF) shows a surge in AI-generated CSAM. Over 3,500 new AI-made abuse images appeared on dark web forums, many depicting severe abuse.

According to the report, perpetrators are even using fine-tuned AI to recreate images of known victims and famous children.

While the majority of CSAM is produced and stored overseas, its digital nature knows no borders.

A 2024 study by ChildSafeNet and UNICEF found that 68 percent of Nepali respondents across all age groups were aware of generative AI, with 46 percent actively using it. Of these, more than half (52 percent) preferred ChatGPT.

The report also raised serious concerns about the risks that generative AI poses to children, such as exposure to harmful content like CSAM, privacy breaches and cyberbullying.

According to Raghuvanshi, while social media platforms and apps can detect nudity and child sexual abuse in real images, they struggle with AI-generated content like Ghibli-style or cartoon images. These images can be used to distribute CSAM and bypass detection tools.

Law enforcement will now have to determine whether CSAM features a real child, whether AI has been used to conceal a child’s identity, and whether the depiction is of a real act, all of which complicates detection.

“Even when photos are maliciously altered, they could be dismissed as just a cartoon, making it harder to prove the image shows an actual victim,” says Raghuvanshi. “This dangerous normalisation of synthetic sexual content could fuel a rise in sextortion and photo mutilation cases.”

Another risk is the potential for peer bullying through fake images, says researcher and technologist Shreyasha Paudel.

Paudel says that while creating fake images is not new, generative AI makes it possible with just a click and no specialised skills. “The current social media environment and AI companies incentivise the spread of such images,” she adds.

Paudel’s concern is not unfounded.

In the fiscal year 2023-24, the Cyber Bureau recorded 635 cases of cyber violence involving children, a 260.8 percent increase from 176 cases in 2022-23. This fiscal year, the bureau has recorded 525 cases as of early April.

In fact, the bureau has repeatedly released statements addressing the rise in character assassination, and the creation and spread of obscene content, all linked to social media and AI use.

Experts say that while governments and NGOs often focus on detecting and preventing CSAM through surveillance, the new capabilities of AI show that this approach alone is not enough.

“Detecting and preventing CSAM in animated or cartoon forms will be difficult as we lack a full understanding of how generative AI creates these images,” said Paudel. “So we can’t yet develop an infallible AI detector for AI-manipulated CSAM.”

She warns that in many countries, the threat of CSAM has been misused to justify increased surveillance and censorship, leading to arrests of activists, journalists, and minorities, even as CSAM itself continues to exist illegally.

“Given the state of the technology, the focus should shift from censorship to accountability, empowerment, and awareness on the pros, cons and ethics of AI use,” says Paudel.

Raghuvanshi seconds Paudel. “Nepal urgently needs updated cybercrime laws to address AI-generated CSAM, including mechanisms to handle cases involving manipulated cartoon or anime-style images,” he said.

Meanwhile, neighbouring China is taking proactive steps. Beijing has introduced AI training, including AI ethics, into elementary school curricula. Starting this September, China plans to integrate AI applications across classrooms. Other countries like Estonia, Canada, and South Korea are also embedding AI education into their school systems.

By contrast, Nepal is lagging behind, with only a draft AI policy in place and a 17-year-old Electronic Transactions Act that no longer addresses the complexities of today’s digital environment. School curricula lack good material on digital safety and internet use, let alone AI, which is in widespread use among children and teenagers.

Paudel suggests that instead of treating the internet and AI as threats, parents, teachers, and children should explore them together as learning tools. “Guiding children to seek adult support and creating a safe, trusting environment where they can communicate should be the priority of the government, civil society, business, and education sectors,” Paudel says.
